So, I’m implementing and testing analog I/O. I wired together one I/O point on two Beckhoff cards: a 2-channel ±10 V EL4032 (output) and a 2-channel ±10 V EL3002 (input).

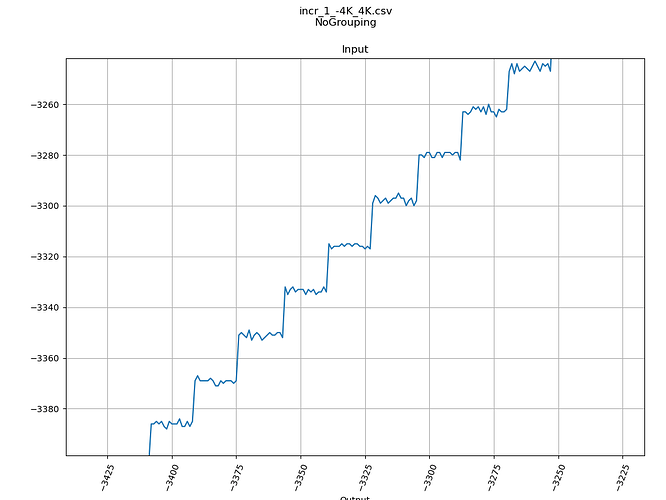

In one test, I walk through various output values. After setting each one, I verify the new value and then sample the input (averaging 5 successive samples). When I go fast enough, I frequently see the test fail because the output value isn’t (quite) what I set it to be.
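A minimal sketch of the average-and-compare step described above. The function names and the tolerance parameter are hypothetical and not part of the RMP API; the actual I/O reads are left out so the snippet stands alone.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Number of successive input samples averaged per step (taken from the test description).
constexpr std::size_t kSamplesPerStep = 5;

// Integer average of the sampled input counts. A 64-bit accumulator avoids overflow.
constexpr std::int32_t average(const std::array<std::int32_t, kSamplesPerStep>& samples) {
    std::int64_t sum = 0;
    for (std::size_t i = 0; i < kSamplesPerStep; ++i) {
        sum += samples[i];
    }
    return static_cast<std::int32_t>(sum / static_cast<std::int64_t>(kSamplesPerStep));
}

// True when the averaged input is within `tolerance` counts of the commanded output.
// `tolerance` is a hypothetical parameter; pick it to suit the cards' resolution and noise.
constexpr bool within_tolerance(std::int32_t expected, std::int32_t actual, std::int32_t tolerance) {
    const std::int64_t diff = static_cast<std::int64_t>(expected) - actual;
    return (diff < 0 ? -diff : diff) <= tolerance;
}
```

If the five samples are taken while the input is still moving toward the new value, the average lands well short of the commanded value and a check like this fails, which would be consistent with failures that only show up when stepping quickly.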

I started sampling the I/O points in the motion scope (with an additional 0.5 sec delay between iterations) to see if I could spot anything going on.

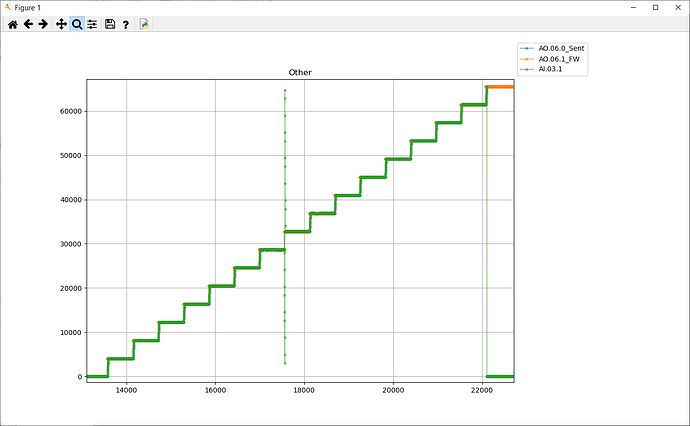

When I step 4096 counts at a time, from 0 to 65535, this is what I observe in the scope.

Mostly good, except for that one spot in the middle.

Delays in Response

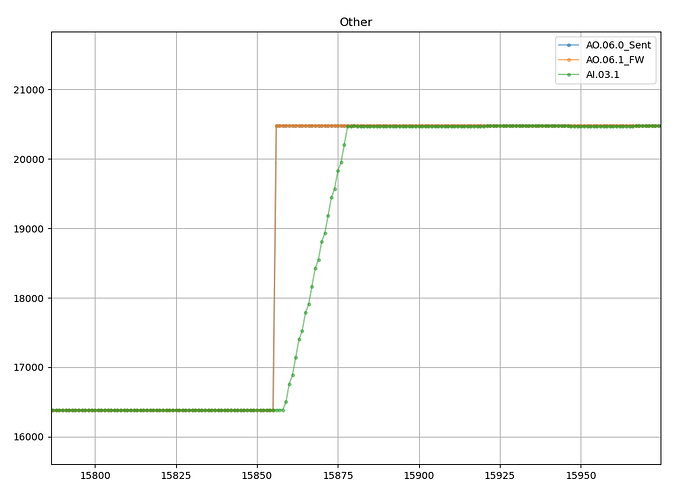

If I zoom in on (any) one of the other steps, I see this.

Question #1: Can you hypothesize about why the input gradually changes over 20ms instead of jumping to the “expected” value?

My best guess is that “one does not simply change voltage” immediately, though 20ms seems like a long time to take to do it. Is this just the behavior of analog I/O cards in general? Do you think it’s a function of the output or the input?

Signed/Unsigned?

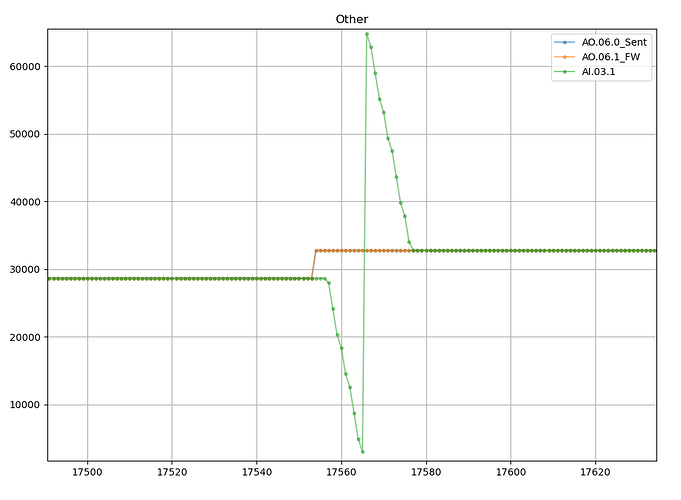

The more troubling component of this is in the middle of the sequence. When I zoom in, I see this.

Conspicuously, this happened when the output changed from 28672 to 32768. This looks like software behavior to me. Naturally, 32768 is represented as 0x8000, which can also be interpreted as -32768 in a 16-bit signed integer. So the “wraparound” from 28K to 32K is sort of understandable, though it’s quite undesirable.
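To make the suspected wraparound concrete, here is a small sketch of how the same 16-bit word reads when treated as signed. The voltage scaling is an assumption (signed presentation with 0x7FFF corresponding to +10 V) that should be checked against the Beckhoff documentation for these terminals; the function names are illustrative only.

```cpp
#include <cstdint>

// Interpret a raw 16-bit word as a two's-complement signed value.
// Written with plain arithmetic so the result is well-defined on any compiler.
constexpr std::int32_t as_signed16(std::uint16_t raw) {
    return raw >= 0x8000 ? static_cast<std::int32_t>(raw) - 65536
                         : static_cast<std::int32_t>(raw);
}

// ASSUMPTION: signed presentation where 0x7FFF (32767) corresponds to +10 V.
constexpr double counts_to_volts(std::int32_t counts) {
    return counts * 10.0 / 32767.0;
}

// 28672 (0x7000) stays 28672, roughly +8.75 V under the assumed scaling.
// 32768 (0x8000) becomes -32768, roughly -10 V under the assumed scaling.
static_assert(as_signed16(28672) == 28672, "below 0x8000 is unchanged");
static_assert(as_signed16(32768) == -32768, "0x8000 reads as the most negative value");
```

Under that assumption, the step from 28672 to 32768 would command a swing from near the top of the range to the bottom, rather than a small step upward.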

Question #2: Are analog inputs/outputs inherently “signed” (by the RMP APIs and underlying implementation, at least)?

The API for setting outputs takes an int32_t (signed). However, “sign” is only a matter of interpretation.

When I send “unsigned” values, I just cast them to a same-size signed type and call the API.
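A sketch of why the cast matters once the value is widened to `int32_t`. It assumes the "same-size" type is 16 bits wide; the helper names are hypothetical, and no RMP call is shown.

```cpp
#include <cstdint>

// Path A: cast to a same-size signed type first, then widen.
// The uint16 -> int16 conversion wraps modulo 2^16 on mainstream compilers
// (guaranteed from C++20, implementation-defined before that).
constexpr std::int32_t widen_via_int16(std::uint16_t raw) {
    return static_cast<std::int32_t>(static_cast<std::int16_t>(raw));
}

// Path B: widen the unsigned value directly, preserving its magnitude.
constexpr std::int32_t widen_direct(std::uint16_t raw) {
    return static_cast<std::int32_t>(raw);
}
```

For 28672 both paths produce 28672. For 32768, path A produces -32768 and path B produces 32768, so the two only agree below 0x8000. Which of the two the API and the cards expect is exactly the open question here.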

Are the I/O cards “signed” in the way they interpret the physical input state and report a value to the EtherCAT master?

Can you explain this behavior to me?